
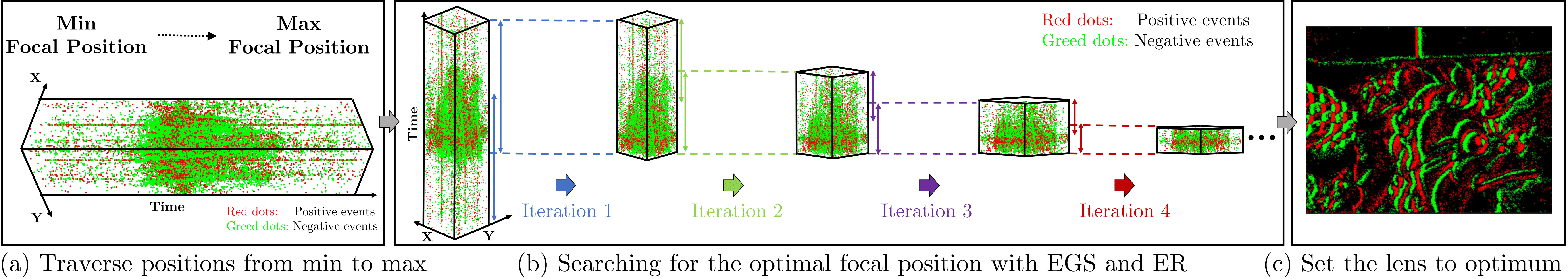

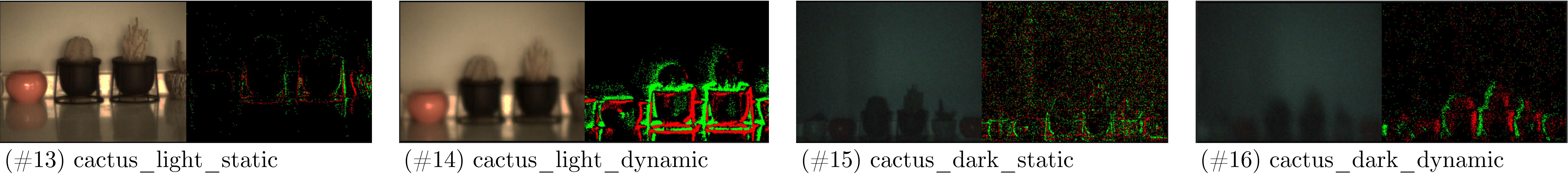

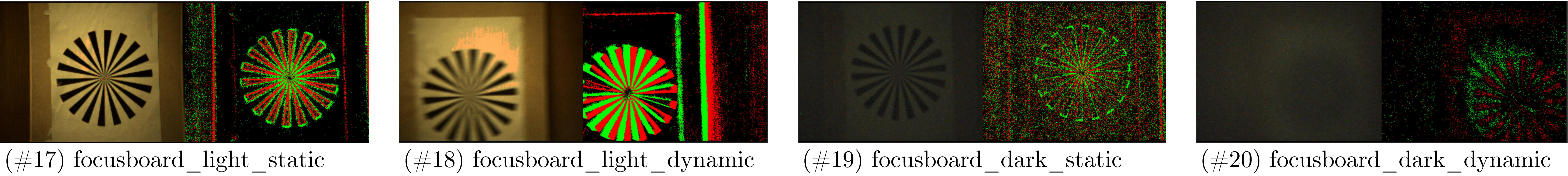

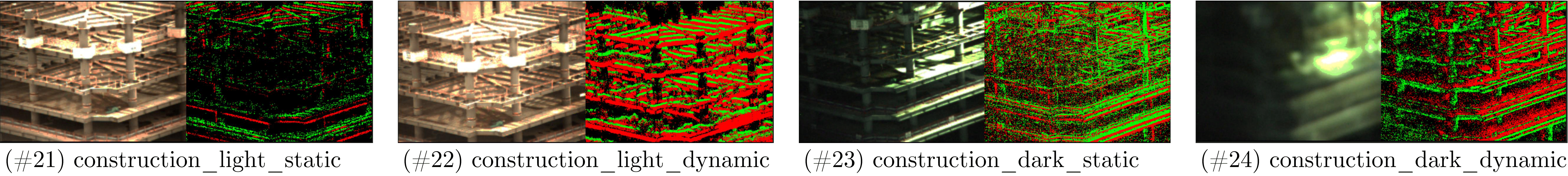

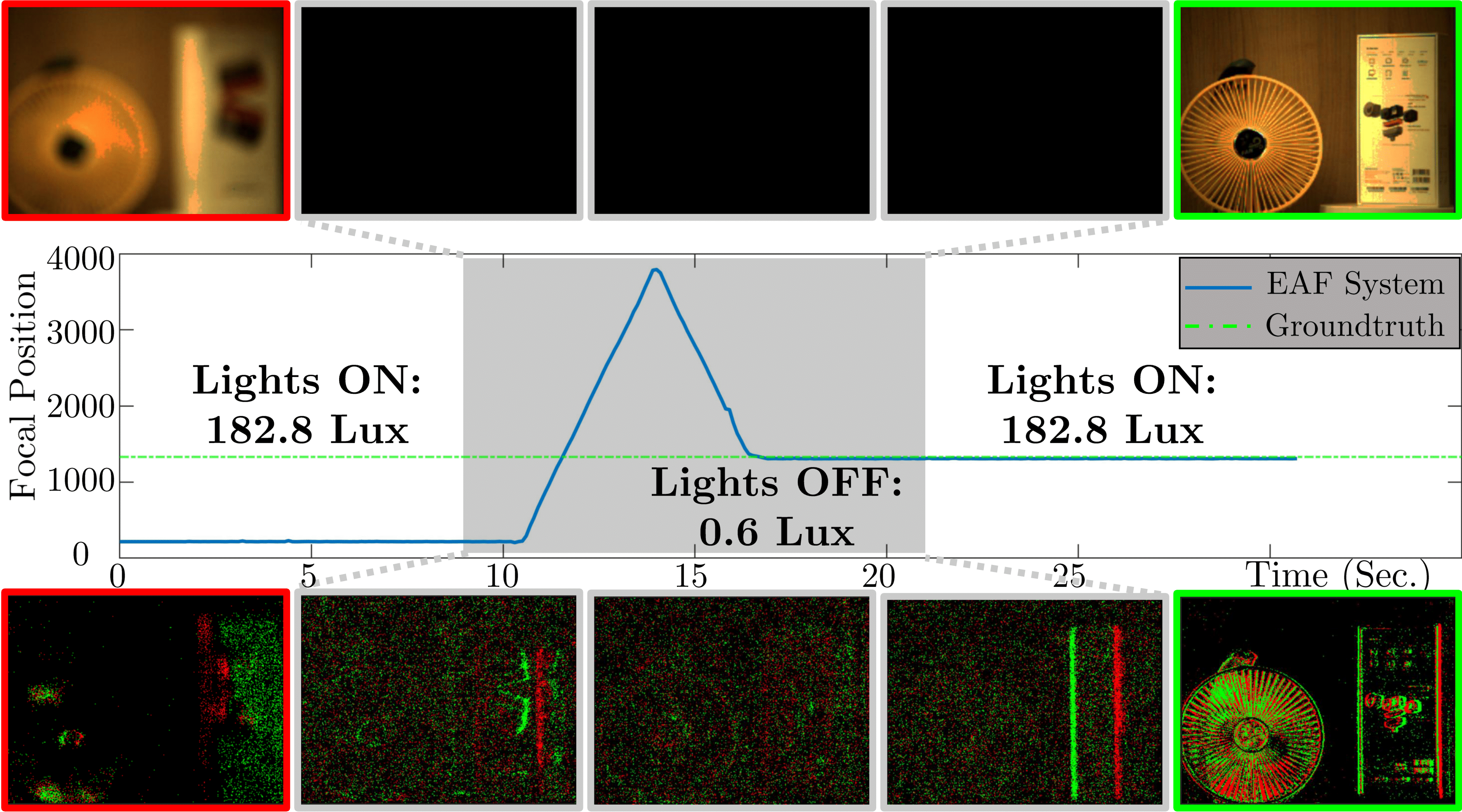

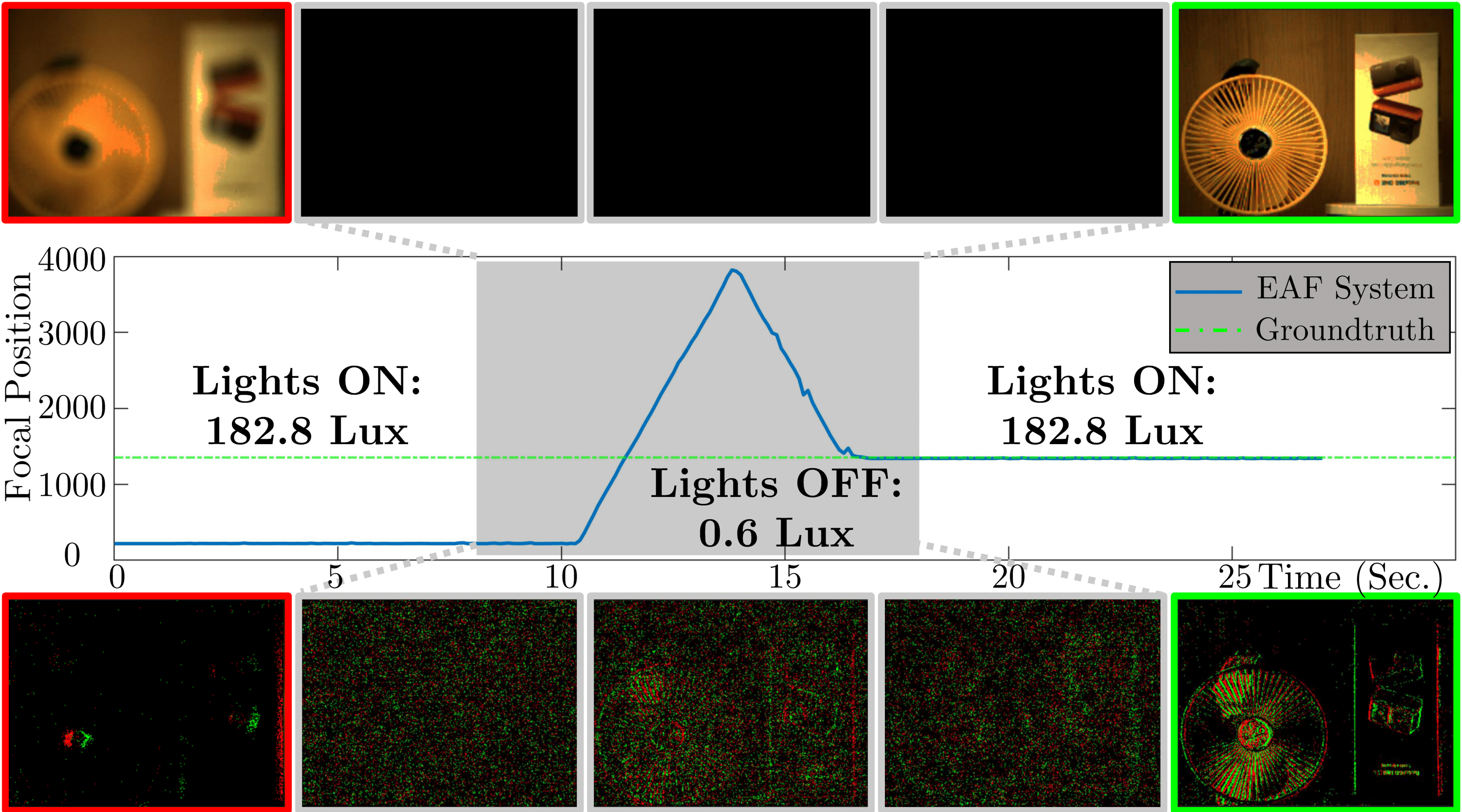

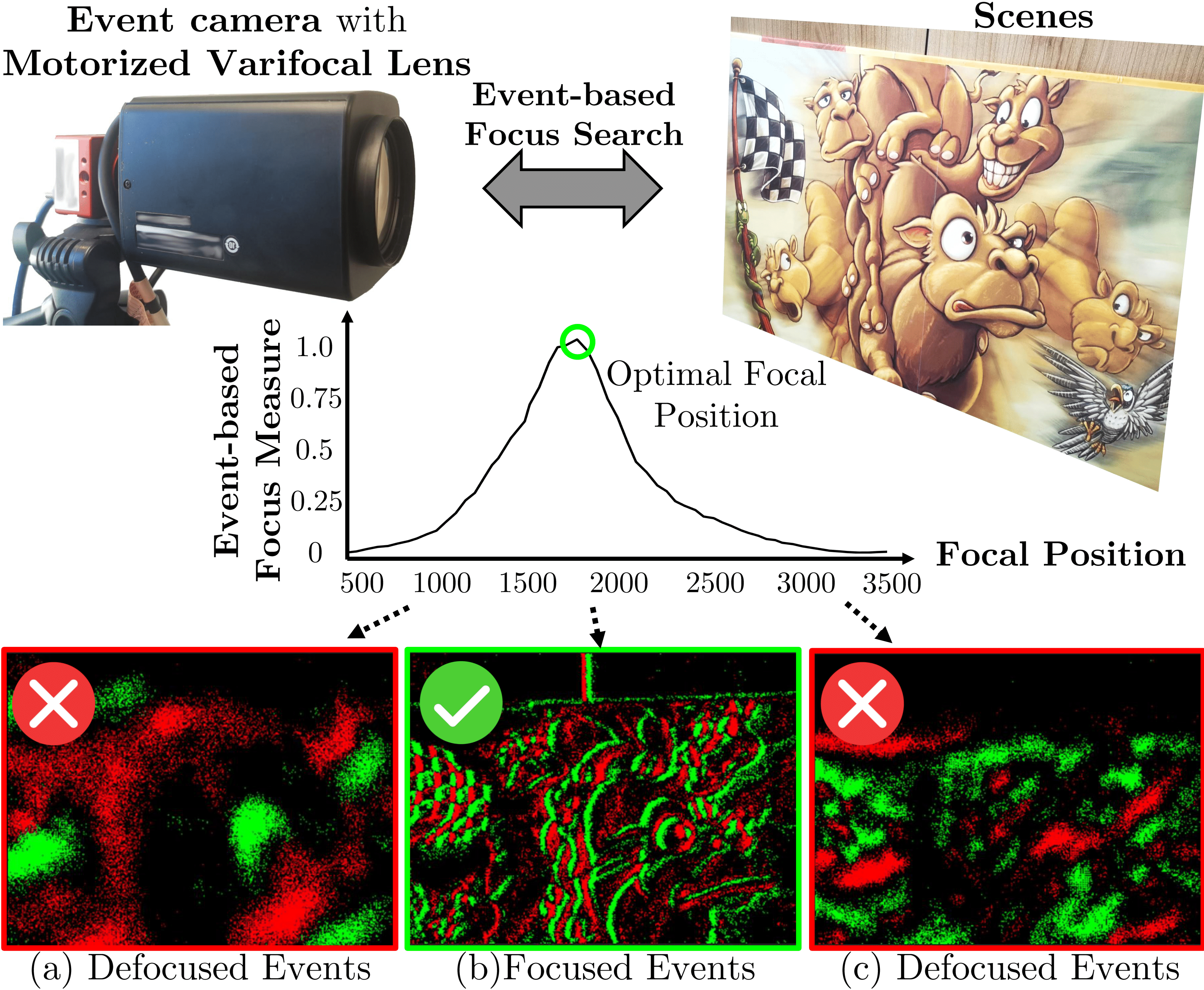

Our event-based autofocus system consists of an event camera and a motorized varifocal lens. It uses the proposed event-based focus measure and search method to drive the lens to the optimal focal position. When the camera is properly focused, its imaging result (b) is sharper and more informative than (a) and (c), where it is defocused.